Searching for truth in the age of misinformation

February 2, 2023

During the height of the pandemic, the American media landscape saw a flurry of conspiracy theories spring up, categorizing COVID-19 as either a hoax or a bioweapon that governments deliberately conspired to create.

Similarly, on Jan. 6, 2021, when a mob of impassioned Trump supporters attempted to “stop the steal,” they did so under the pretext that a hidden cabal stole the presidential election, a narrative inflated by conspiracy theories regarding a secretive “deep state” committed to undermining America’s democratic process.

At first glance, these issues may seem worlds apart. One is a matter of public health, and the other is a crisis of faith in the American electoral college system. However, they have one thing in common: roots in a growing crisis in media literacy.

Media literacy is the ability to proficiently decode the meaning behind online content, such as memes, news stories, social media posts and more. Internet users with high media literacy are less likely to fall for online hoaxes, misinformation or deliberate lies and disinformation, because they can spot signs of incorrect information and decide how much of what they see online to believe.

When misinformation or disinformation spreads, there are real people affected by it. Conspiracy theories aimed at people of color, members of the LGBTQ community, women and beyond affect how people vote in elections, how they treat others and how they spread disinformation. Media literacy education, which has become more popular over recent years, is often the next step in trying to stop conspiracy theories and hoaxes.

For example, since early 2020, politicians and voices from the far right have started calling LGBTQ people “pedophiles,” which has caused a spike in hate crimes and assaults against members of the LGBTQ community.

According to a study on Statista, more than 38% of Americans have accidentally shared news that was fake or misleading, and only 26% are very confident in their ability to spot misinformation.

Fears over media literacy were especially brought to the public’s attention during the 2016 election when Cambridge Analytica, a consulting firm specializing in data collection, was exposed for selling Facebook user data to political campaigns. This exchange, as the accusation went, worked to deepen the polarization of American political culture and caused a schism that moved voters into radically different political worlds.

The crisis of internet-based polarization has fostered the emergence of political bubbles on the internet, wherein participants only consume political content that reinforces their ideological biases. These internet bubbles serve as half subculture and half political organization, giving rise to a host of conspiracy theories that had previously resided in relative obscurity.

The introduction of once-fringe conspiracy theories into mainstream discourse carries with it grave implications for the health of American democracy. From climate denialism to lies about the Black Lives Matter movement committing fraud, conspiracy theories have become a powerful tool in obstructing the advancement of environmental, racial and economic justice.

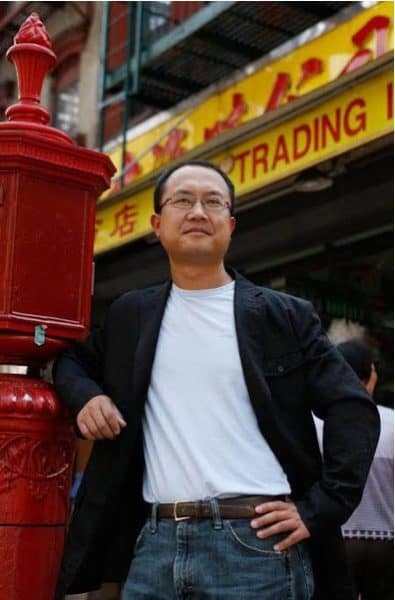

A.J. Bauer, a professor specializing in conservative news and right-wing media, said these “modern” conspiracy theories are, in many ways, new in form but old in substance, particularly in relation to the American right-wing.

“There’s a long history of conspiratorial thinking on the right. It isn’t really that new. If you look back, for example, to the beginning of the modern conservative movement in the late 1940s and early 1950s, there were a lot of conspiracy theories about Communist subversion,” Bauer said.

Bauer mentioned how the conservative movement of the 20th century wielded conspiracy theories to label Martin Luther King Jr. as a communist to discredit the broader civil rights movement.

Bauer also described how the conspiratorial thinking of the 21st century works differently from that of past generations. More specifically, he described the inner workings of QAnon, a conspiracy theory first formulated on far-right message boards that mythologized the Trump administration as a force engaged in an abstruse struggle against America’s secret elite.

“I think what makes QAnon different is that it’s like an omnibus conspiracy theory. It’s looping in a whole bunch of random conspiracy theories over time. Everything from Communist subversion to anti-Semitic tropes about blood libel,” Bauer said. “These are all theories with their own discrete histories. And another difference is it isn’t a top-down conspiracy theory where you have a leader who’s saying, ‘Here’s what’s going on secretly’ and riling the grassroots. It’s almost kind of a participatory conspiracy theory.”

If Bauer’s analysis is correct, and QAnon is more like an “omnibus theory” that is more grassroots than top-down, it would seem to coincide with the internet making media more fragmented than ever. Unlike past generations, there are no longer unifying voices to represent the media, such as Walter Cronkite or Barbara Walters, to help build a common truth. Therefore, it has become imperative for regular people to pierce through webs of misinformation.

Jiyoung Lee, an assistant professor in the College of Communication & Information Sciences, researches misinformation and human-computer interaction. Lee is at the forefront of tackling new sets of problems introduced by the advent of the internet, working on models to understand how misinformation spreads through public discourse.

“Students should always double-check where messages are coming from, who spreads these messages, and whether the source of the message is reliable or not,” Lee said. “But in addition to that, related to what I am doing my research about, like synthetic media, when we think about deep fakes, it is difficult for us to simply just be educated.”

Deep fakes are the newest development in the spread of fake news. With the use of deep learning artificial intelligence, agents of disinformation can digitally alter the image of someone, often a celebrity or politician, to portray them saying or doing things that they never said or did.

Lee brought up how the development of new technologies often enables agents of disinformation to deceive audiences into believing distortions of the truth or outright lies. To this end, Lee offered different tips for students at the University on navigating the growing tide of virtual deceptions.

“We’re normal people. It is super difficult to see whether deep fake, video-based misinformation is true or not,” Lee said. “Instead of being passive observers toward this phenomenon, [students] should take an active role in understanding what things are going on in our society, what are some popular narratives that are being spread on social media these days so that they can simply be aware of what’s going on and then behave in a more active way.”

Despite all attempts to the contrary, misinformation is here to stay. With new technology come new problems, and experts are now looking at how to adapt instead of trying to reverse the clock. Any hope of social progress in a democratic society hinges on the ability of a well-informed public to understand the world around them accurately.

In order to regain some semblance of normalcy in the age of misinformation, students and academics are looking to play a more active role in combating the spread of fake news. Modern problems require modern solutions, and adapting to an ever-changing media landscape will continue to be an important challenge.